When Will You Ever Be Cured of This Ailment?

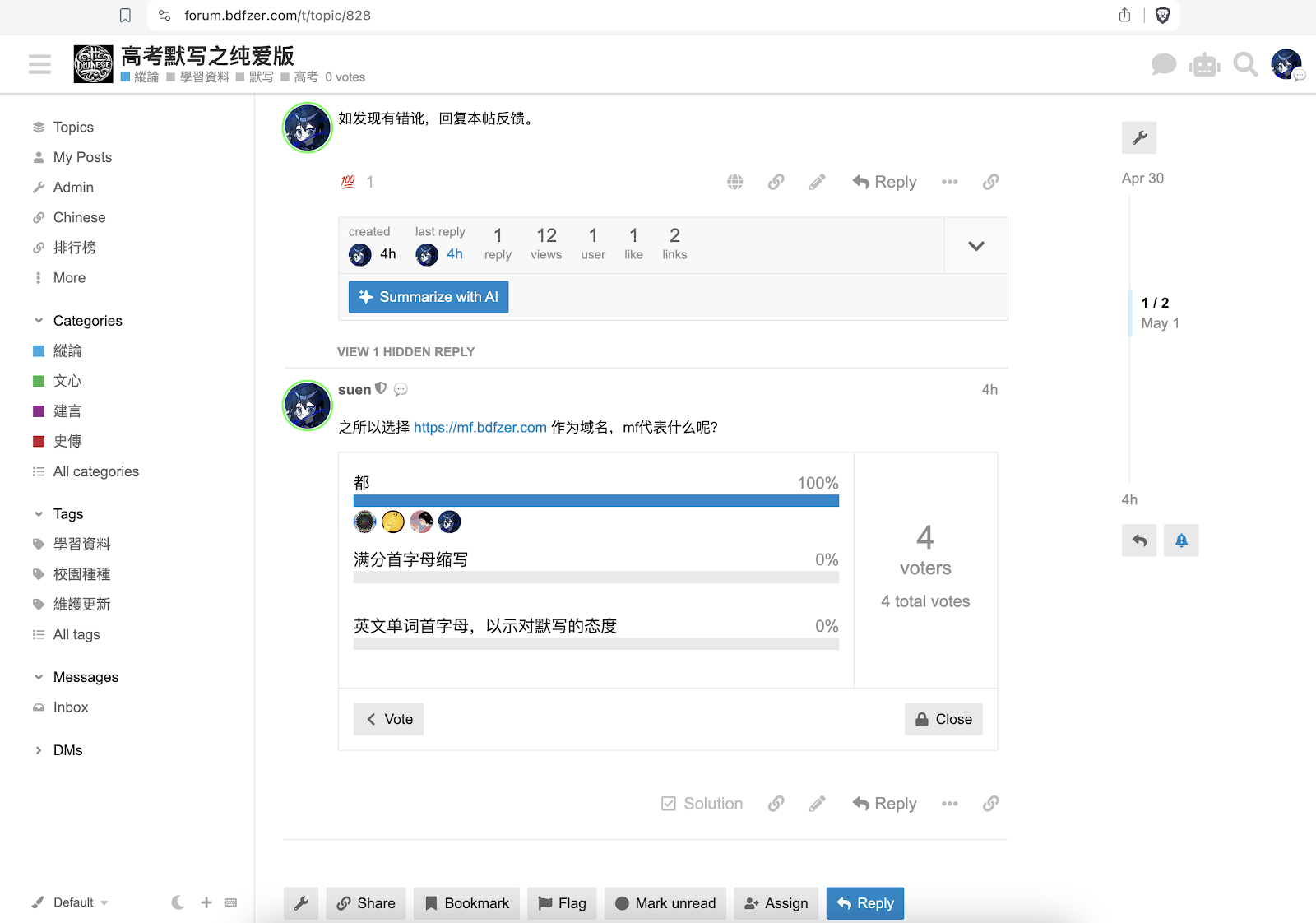

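Writing texts out from memory (默写) is worth eight points on the gaokao. If handwriting is a kind of nausea, then this is without doubt a sin. After all, as far as domestication goes, nothing is more efficient than writing from memory.

So over the May Day holiday I put in the labor, pulled everything together, and finished building a website of the classical poems and prose that must be memorized for the gaokao:

1. No annotations of any kind; the site covers only the full set of texts that must be memorized for the gaokao writing-from-memory questions.
2. For texts that the new curriculum standard and the textbooks require to be memorized, the union of the two is used.
3. Where the curriculum standard specifies a particular range but the textbook sets no requirement, the union is used, and the part specified by the curriculum standard is marked in blue.
4. Where the curriculum standard specifies no particular range but the textbook marks one, the union is used, and the part specified by the textbook is marked in red.
5. The wording follows the current unified national edition (部编本) textbooks; texts that are in the curriculum standard but absent from the current edition follow the earlier People's Education Press edition (人教版). The text was copied directly from the current official textbooks, and GPT-4 was used to delete the annotation markers.
6. Because these questions are answered by hand, the pages use a Simplified Chinese calligraphy font.
7. The site is built with Google Sites. URL: 高考古诗文 (requires circumventing the Great Firewall). Custom domain: https://mf.bdfzer.com (no circumvention needed).

Version 1.0 is released today. It will be updated and refined over time: content that has already appeared on past exams will gradually be marked, and the writing-from-memory questions from past years' papers will be appended.

Every page was built by hand, one at a time, which was sheer manual labor. Once that was done, I crawled my own site while I was at it, so the content can easily be moved elsewhere. The code for the little crawler is public:

    import requests
    from bs4 import BeautifulSoup
    from urllib.parse import urljoin, urlparse
    import os
    import time
    import html2text


    def get_all_website_links(url, domain_name, visited, max_depth=3):
        """Recursively collect same-site links reachable from url."""
        if max_depth <= 0 or url in visited:
            return set()
        visited.add(url)
        urls = set()
        try:
            response = requests.get(url, headers={'User-Agent': 'Mozilla/5.0'}, timeout=10)
            response.raise_for_status()
            # time.sleep(1)  # Adjust or remove this line depending on how much load the target site can handle
        except requests.RequestException as e:
            print(f"Request error {url}: {e}")
            return urls
        soup = BeautifulSoup(response.text, 'html.parser')
        for a_tag in soup.find_all("a"):
            href = a_tag.attrs.get("href")
            if href == "" or href is None:
                continue
            href = urljoin(url, href)
            parsed_href = urlparse(href)
            # Drop the query string and fragment so each page is visited once
            href = parsed_href.scheme + "://" + parsed_href.netloc + parsed_href.path
            # Compare hosts exactly, so mailto: links and other sites are skipped
            if href not in visited and parsed_href.netloc == domain_name:
                urls.add(href)
                urls.update(get_all_website_links(href, domain_name, visited, max_depth - 1))
        return urls


    def scrape_website(url):
        """Save every discovered page as Markdown, plus one combined file."""
        domain_name = urlparse(url).netloc
        visited = set()
        visited_urls = get_all_website_links(url, domain_name, visited)
        converter = html2text.HTML2Text()
        converter.ignore_links = False
        all_content = ""
        folder_path = 'mf'
        os.makedirs(folder_path, exist_ok=True)
        counter = 0
        for link in visited_urls:
            print(f"Processing: {link}")
            counter += 1
            if counter % 10 == 0:
                print(f"Processed {counter} links...")
            try:
                response = requests.get(link, headers={'User-Agent': 'Mozilla/5.0'}, timeout=10)
                response.raise_for_status()
                soup = BeautifulSoup(response.text, 'html.parser')
                # Fall back to a placeholder if the page has no <title>
                title_tag = soup.find('title')
                title = (title_tag.text if title_tag else 'untitled').replace('/', '|')
                markdown_content = converter.handle(response.text)
                filename = f"{folder_path}/{title}.md"
                with open(filename, 'w', encoding='utf-8') as file:
                    file.write(markdown_content)
                all_content += f"# {title}\n\n{markdown_content}\n\n"
            except requests.RequestException as e:
                print(f"Error while processing link {link}: {e}")
        with open(f'{folder_path}/all_content.md', 'w', encoding='utf-8') as file:
            file.write(all_content)


    start_url = "https://mf.bdfzer.com/"
    scrape_website(start_url)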
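Two steps of the crawler above can be tried out without touching the network: normalizing each link before deciding whether to follow it, and turning a page title into a file name. The following is only a minimal sketch, not part of the original script. The helper names `normalize_link` and `safe_filename` are hypothetical, as are the sample links and the sample title. It also assumes that only `http`/`https` links on exactly the same host should be followed. The title helper replaces a wider set of characters than the original `/` to `|` substitution, because `|` is itself not allowed in file names on Windows.

```python
import re
from urllib.parse import urljoin, urlparse


def normalize_link(base_url, href, domain_name):
    """Resolve href against base_url.

    Returns a same-site URL with query string and fragment removed,
    or None if the link should not be followed (hypothetical helper).
    """
    if not href:
        return None
    parsed = urlparse(urljoin(base_url, href))
    # Assumption: only http(s) links on exactly the same host are followed
    if parsed.scheme not in ("http", "https") or parsed.netloc != domain_name:
        return None
    return f"{parsed.scheme}://{parsed.netloc}{parsed.path}"


def safe_filename(title, fallback="untitled"):
    """Replace characters that are invalid in file names on common systems."""
    cleaned = re.sub(r'[\\/:*?"<>|]', "_", title).strip()
    return cleaned or fallback


if __name__ == "__main__":
    base = "https://mf.bdfzer.com/"
    # Hypothetical sample links, chosen only to exercise each branch
    samples = ["/home?x=1#top", "mailto:someone@mf.bdfzer.com", "https://example.com/a"]
    for href in samples:
        print(href, "->", normalize_link(base, href, "mf.bdfzer.com"))
    # Hypothetical page title containing characters that need replacing
    print(safe_filename("必修上/下 | 目录"))
```

Keeping these as pure functions means the filtering and naming rules can be checked on a handful of strings before the crawler is pointed at a live site.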